Structure Guided Image Inpainting using Edge Prediction

University of Ontario Institute of Technology

2000 Simcoe St. N., Oshawa ON L1G 0C5

Abstract

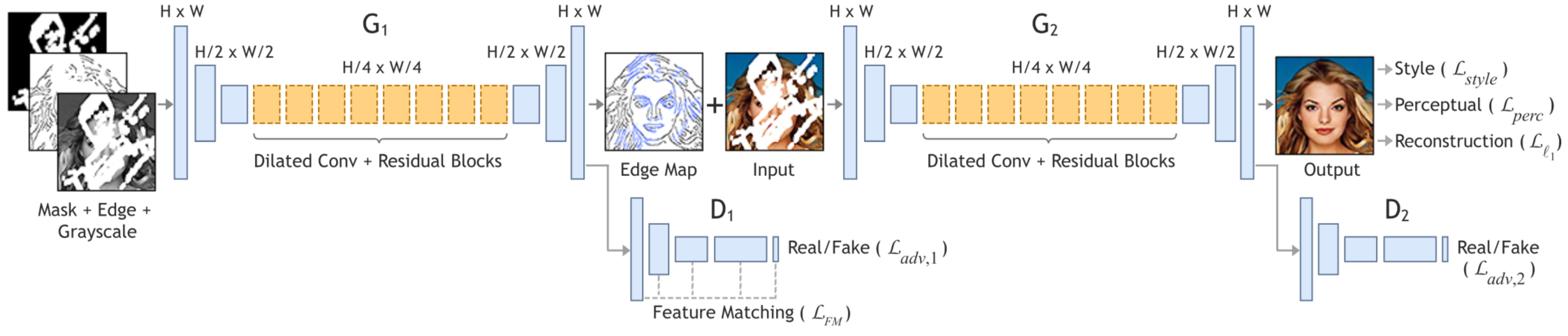

We propose a two-stage model that separates the inpainting problem into structure prediction and image completion. Much like an artist sketching before painting, our model first predicts the image structure of the missing region in the form of edge maps. The predicted edge maps are then passed to the second stage to guide the inpainting process. We evaluate our model end-to-end on the publicly available datasets CelebA, CelebHQ, Places2, and Paris StreetView, on images up to a resolution of 512 × 512, and show that this approach outperforms current state-of-the-art techniques both quantitatively and qualitatively.
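The data flow of the two stages can be illustrated with a small sketch. This page describes the pipeline only at a high level, so the code below rests on explicit assumptions: images are single-channel grids of floats (the real model works on color images), a mask value of 1 marks a missing pixel, each network receives the hole mask, and predicted values are used only inside the hole while known pixels are kept as-is. The helper names (`apply_hole`, `composite`, `two_stage_inpaint`) and the two toy "networks" are hypothetical stand-ins for illustration, not the authors' implementation.

```python
from typing import Callable, List

# Assumption: a single-channel H x W image stored as nested lists of floats.
Grid = List[List[float]]


def apply_hole(grid: Grid, mask: Grid) -> Grid:
    """Zero out pixels inside the hole (mask == 1) and keep the rest."""
    return [[0.0 if m else v for v, m in zip(row, mrow)] for row, mrow in zip(grid, mask)]


def composite(known: Grid, predicted: Grid, mask: Grid) -> Grid:
    """Take predicted values inside the hole and known values everywhere else."""
    return [
        [p if m else k for k, p, m in zip(krow, prow, mrow)]
        for krow, prow, mrow in zip(known, predicted, mask)
    ]


def two_stage_inpaint(
    image: Grid,
    edges: Grid,
    mask: Grid,
    edge_model: Callable[[Grid, Grid], Grid],
    inpaint_model: Callable[[Grid, Grid, Grid], Grid],
) -> Grid:
    # Stage 1 (structure prediction): the edge model only sees edges outside the hole.
    predicted_edges = edge_model(apply_hole(edges, mask), mask)
    edge_guide = composite(edges, predicted_edges, mask)
    # Stage 2 (image completion): fill the hole, guided by the completed edge map.
    predicted_image = inpaint_model(apply_hole(image, mask), edge_guide, mask)
    return composite(image, predicted_image, mask)


# Hypothetical toy stand-ins for the two trained networks.
def toy_edge_model(masked_edges: Grid, mask: Grid) -> Grid:
    # Pretend the network predicts a vertical edge in column 1.
    return [[1.0 if c == 1 else 0.0 for c in range(len(row))] for row in masked_edges]


def toy_inpaint_model(masked_image: Grid, edge_guide: Grid, mask: Grid) -> Grid:
    # Paint edge pixels white and fill all other pixels with the mean known intensity.
    known = [v for row, mrow in zip(masked_image, mask) for v, m in zip(row, mrow) if not m]
    fill = sum(known) / len(known) if known else 0.0
    return [[1.0 if e else fill for e in erow] for erow in edge_guide]


image = [[0.2, 0.9, 0.2] for _ in range(3)]
edges = [[0.0, 1.0, 0.0] for _ in range(3)]
mask = [[0.0, 0.0, 0.0], [0.0, 1.0, 1.0], [0.0, 0.0, 0.0]]  # two missing pixels

result = two_stage_inpaint(image, edges, mask, toy_edge_model, toy_inpaint_model)
print(result[1])  # middle row: known 0.2, predicted edge 1.0, mean fill of about 0.4
```

The point of the split is visible in `two_stage_inpaint`: the second stage never has to invent structure on its own, because it receives an edge map that is already complete inside the hole.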

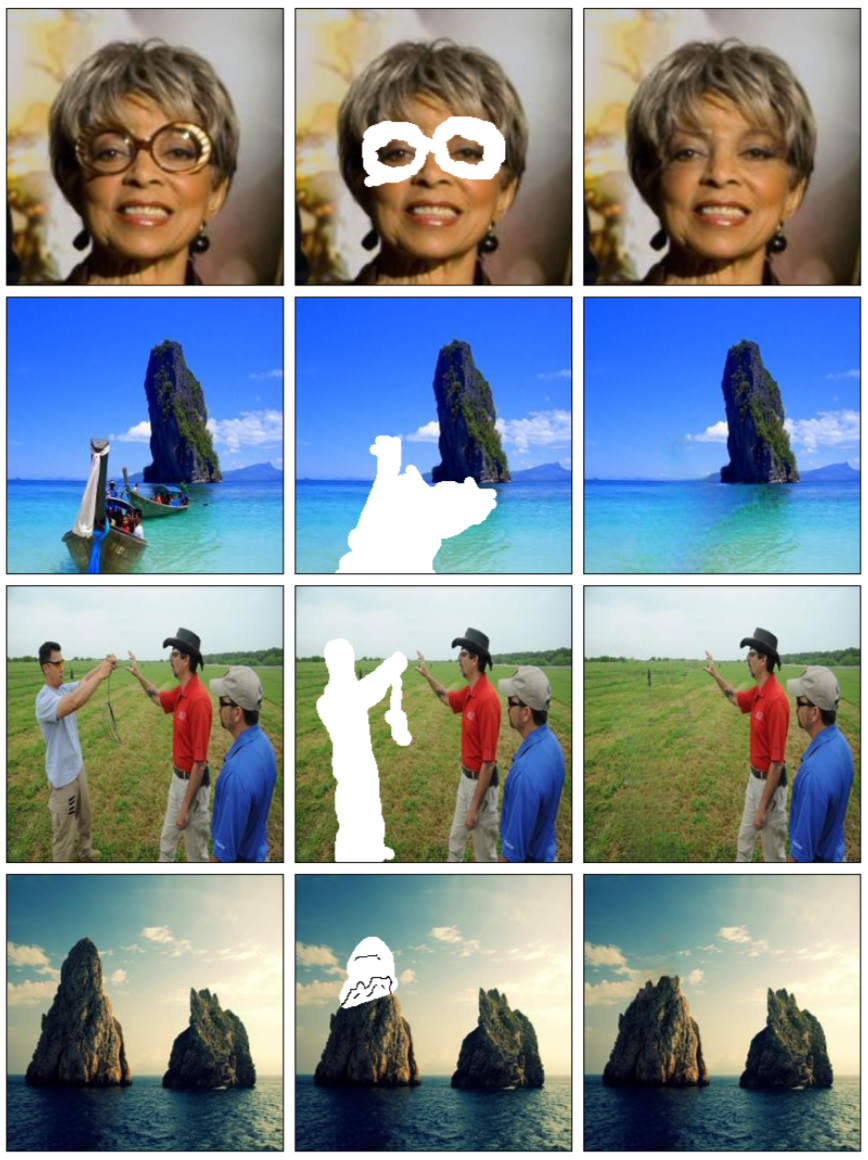

Some results

The proposed network can combine color and edge information from different sources to create new images.

The proposed method can also be used for photo editing.

Supplementary Material

More information is available here.

Publication

For technical details, please see the following publications: